Donald Rumsfeld is a controversial character. An arch-neoconservative, he played a central role in America’s interventions in Iraq. But he was undoubtedly a smart dude: he was both the youngest and the oldest US Secretary of Defense, as well as CEO of several companies.

One of the things he’s best known for is a comment he made at a Department of Defense news briefing in 2002:

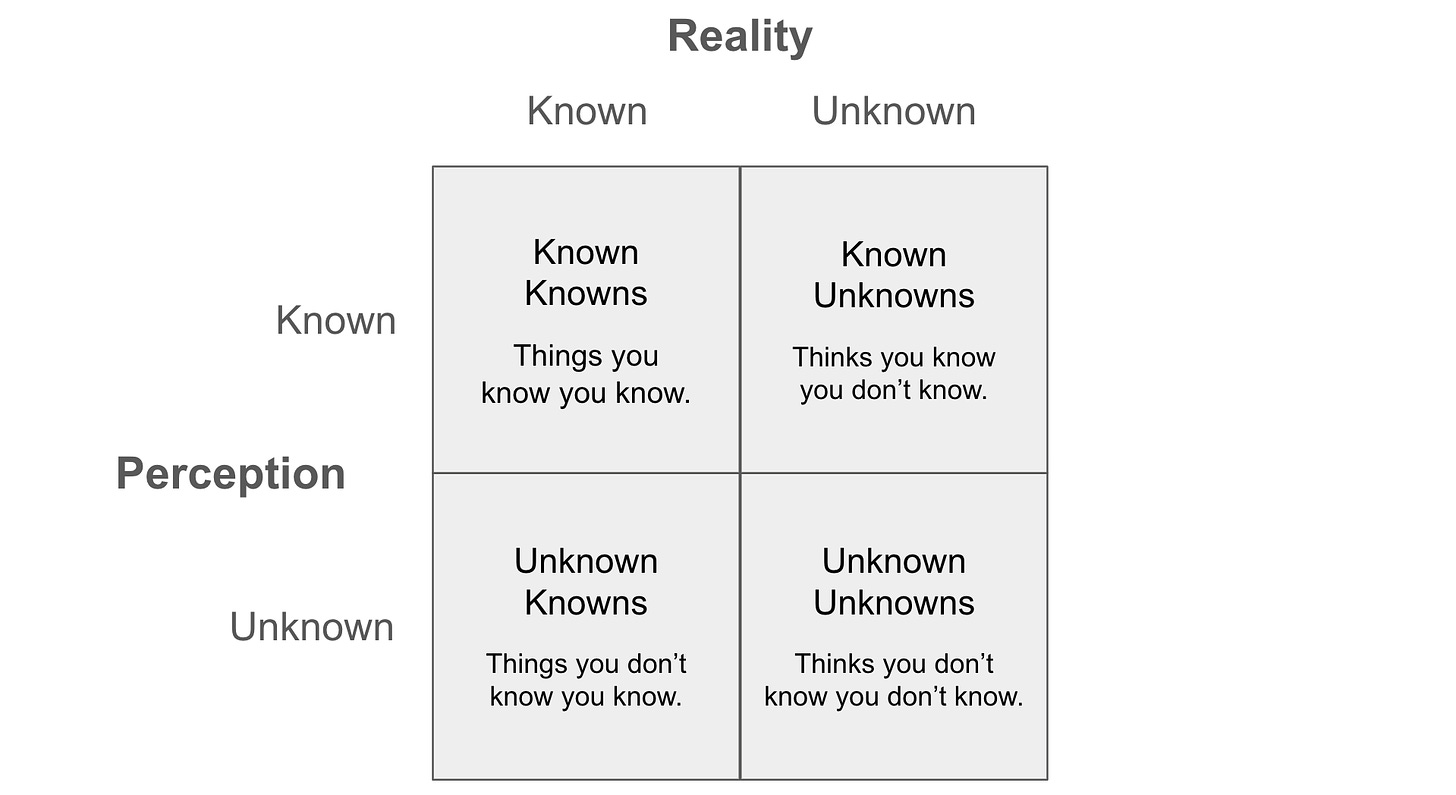

Reports that say that something hasn't happened are always interesting to me, because as we know, there are known knowns; there are things we know we know. We also know there are known unknowns; that is to say we know there are some things we do not know. But there are also unknown unknowns—the ones we don't know we don't know. And if one looks throughout the history of our country and other free countries, it is the latter category that tends to be the difficult ones.

This statement even has its own Wikipedia page, and it inspired the title of the documentary about Rumsfeld, The Unknown Known.

He made the comments in relation to geopolitics and foreign policy, but I’ve found this an incredibly useful framework in product management for making sense of information, or the lack of it.

1. Known Knowns: Things you know you know.

This is the easy one. Let’s take core product metrics like revenue, MAU, and retention. You’ve likely got these instrumented and on a dashboard. You know where to go to get the latest information. You probably know the numbers off the top of your head. You know you know them.

2. Known Unknowns: Things you know you don't know.

Perhaps one of your metrics has dropped, but you don’t know why. You’re looking at your retention charts and you can see that new-user retention is X, but you don’t really know why. But you know you don’t know. And when you know you don’t know something, it’s easy to identify actions you can take to remedy the situation.

Perhaps you need to add more logging to plug gaps in your data. Perhaps you need to do some user research to understand the patterns you’re seeing in the data. And when you take these actions, you’ll turn known unknowns into known knowns.

3. Unknown Knowns: Things you don't know you know.

It’s a bit counterintuitive, but I think of these as data or customer insights you have lying around but aren’t using. A good example is a dashboard built by your team’s previous data scientist, or a research deck from before your time as the team’s PM. Or perhaps there’s a cut of your existing data that you’re not using but that would reveal some critical insight, such as breaking your MAU charts down by country. Before you commission new data or new research, take a look at what you may already have; it’ll save you the time and effort of duplicating work.

4. Unknown Unknowns: Things you don't know you don't know.

This is where the wicket gets sticky. As a PM, part of your job is to anticipate and remove blockers. That’s easy with risks you’ve identified, but it’s the unexpected that can really mess things up.

That stakeholder you didn’t know was a stakeholder. That requirement no one told you was a requirement. That legal approval you needed but didn’t know about. That team whose goals you didn’t realise conflicted with yours. A competitor’s unexpected product launch.

Complacency is risky in any situation. If you focus only on the things you know, or the things you know you don't know, what are you missing?

Moving through the Rumsfeld Matrix

As a PM who’s accountable for creating a customer or business outcome, you want to maximise your chances of success by reducing your risks. One way to help with that is to use the Rumsfeld Matrix.

Take steps to turn unknown unknowns into known unknowns.

Take steps to turn known unknowns into known knowns.

Tackling Unknown Unknowns

What can you do to turn unknown unknowns into known unknowns? Well, you’ve already taken the first step.

….stay with me here….

You now KNOW there are unknown unknowns.

Here are some activities you can perform to turn unknown unknowns into known unknowns.

1/ The Pre-mortem

We all know what post-mortems are: a retrospective at the end of a project to review what went well and what didn’t, and to decide what we should do differently next time.

A pre-mortem is exactly what you’d expect from the name: you and your team sit down, think about all the things that could go badly (or go well!) on a project, and make a plan for handling them. The first phase of a pre-mortem is about identifying the things to plan for, and it’s bringing together a group of people with the explicit goal of identifying and cataloguing them that turns unknown unknowns into known unknowns. Each person brings a different set of skills and experiences, so something that is a known unknown to one of them might be an unknown unknown to the project as a whole.

The second phase, the “making a plan” part, is about turning this list of now-known unknowns into manageable risks: known knowns.

Back in 2020 I was working on a set of somewhat controversial projects that also carried a high degree of uncertainty about how they’d affect a complicated information ecosystem. While a few folks were fundamentally opposed to what we were planning, most of the people raising objections were worried that we hadn’t fully thought through the implications. So, we performed a pre-mortem. We brought together a multidisciplinary team: everyone from engineering and design to legal, public policy, and communications. It was this broad group that identified and catalogued upwards of 20 risks. A few of these were previously known, but the exercise threw up a bunch more that we’d not been thinking about. Once we’d written them down, we could put a plan in place to address them.

2/ The Red Team

Security systems are especially vulnerable to unknown unknowns. You can design systems to protect against the risks you know about, but what about the ones you don’t?

A common solution is to perform a red team exercise.

“Red teaming” is where you set up a group of people who pretend to be the enemy. They attempt to gain entry to a secure system (physical, digital, or both) by finding its weaknesses while avoiding its strengths (sensors, triggers, etc.). The red team then reports back on any gaps they find so that the system can be made more robust.

The concept of “red teaming” originates from the Cold War (blue = USA, red = USSR) and is common in security and integrity strategies, but it’s applicable more broadly.

When you’re developing a product, what are all the ways it could be misused? What are all the ways it could break? Traditional QA is about ensuring that what you’ve built works as expected, but the best QA testers TRY TO BREAK STUFF. They upload huge files; they hit refresh 50 times a second; they intentionally break the network. They look for unknown unknowns.

Summary

As my old boss once said, “assumption is the mother of all fuckups”.

What I like about the idea of unknown unknowns is that once you know about them, you can take action to turn them into known unknowns, before they come and bite you on the arse.
